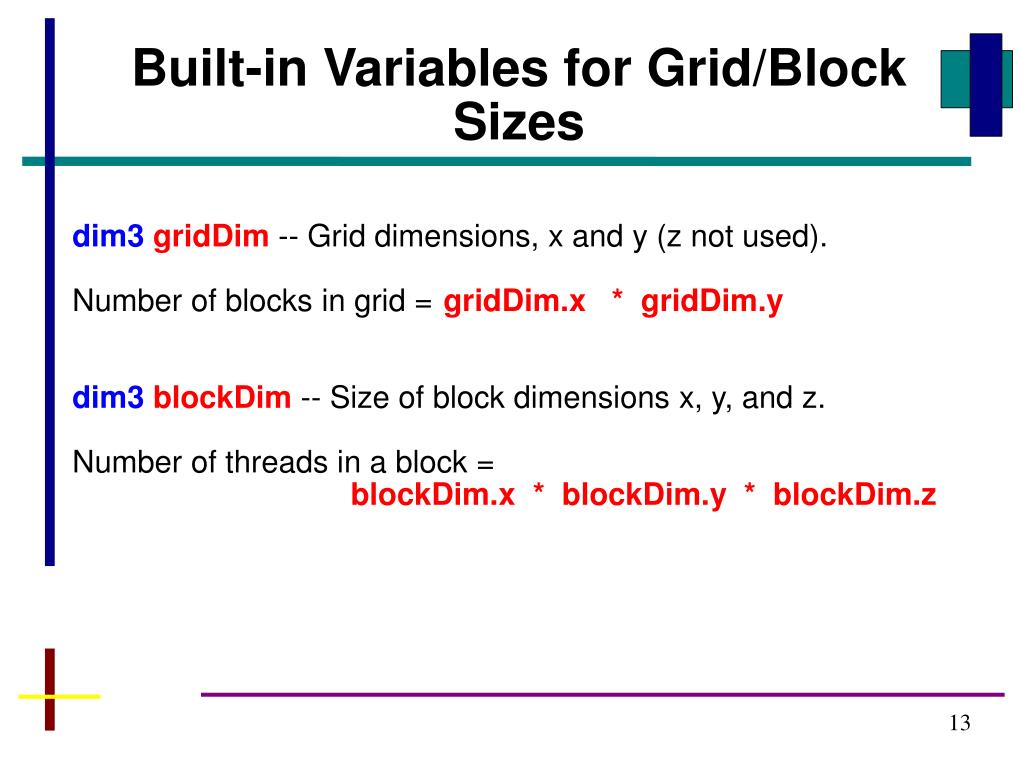

The `threadsPerBlock(...)` syntax takes up to three arguments, for the x, y and z dimensions respectively; any dimension you leave out defaults to 1. It uses the special type `dim3` that CUDA provides to represent a size in three dimensions. The `numBlocks` variable, on the other hand, was declared as an `int` that specifies how many blocks you want to run the code in.
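To see what that launch configuration looks like from inside the GPU, here is a minimal sketch. The kernel `recordDims`, the helper `launch_dims_demo` and the size `N = 4` are hypothetical names and values I picked for illustration; only `dim3`, `numBlocks` and `threadsPerBlock` come from the discussion above.

```cuda
#include <cuda_runtime.h>

constexpr unsigned int N = 4;  // hypothetical size, chosen only for illustration

// Hypothetical kernel: a single thread records the launch dimensions it sees.
__global__ void recordDims(unsigned int *out)
{
    if (threadIdx.x == 0 && threadIdx.y == 0 && threadIdx.z == 0) {
        out[0] = blockDim.x;  // threads per block along x
        out[1] = blockDim.y;  // threads per block along y
        out[2] = blockDim.z;  // threads per block along z
        out[3] = gridDim.x;   // number of blocks along x
    }
}

// Hypothetical host helper: returns true if the device saw the expected dimensions.
bool launch_dims_demo()
{
    int numBlocks = 1;           // a plain int: a one-dimensional grid with one block
    dim3 threadsPerBlock(N, N);  // z is omitted, so it defaults to 1

    unsigned int *d_out = nullptr;
    if (cudaMalloc((void **)&d_out, 4 * sizeof(unsigned int)) != cudaSuccess)
        return false;

    recordDims<<<numBlocks, threadsPerBlock>>>(d_out);

    // This blocking copy also waits for the kernel to finish.
    unsigned int h_out[4] = {0, 0, 0, 0};
    cudaError_t err = cudaMemcpy(h_out, d_out, sizeof(h_out), cudaMemcpyDeviceToHost);
    cudaFree(d_out);
    if (err != cudaSuccess)
        return false;

    return h_out[0] == N && h_out[1] == N && h_out[2] == 1 && h_out[3] == 1;
}
```

Calling `launch_dims_demo()` from `main` on a machine with a CUDA-capable GPU should return `true`: the block is N-by-N-by-1 and the grid holds a single block.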

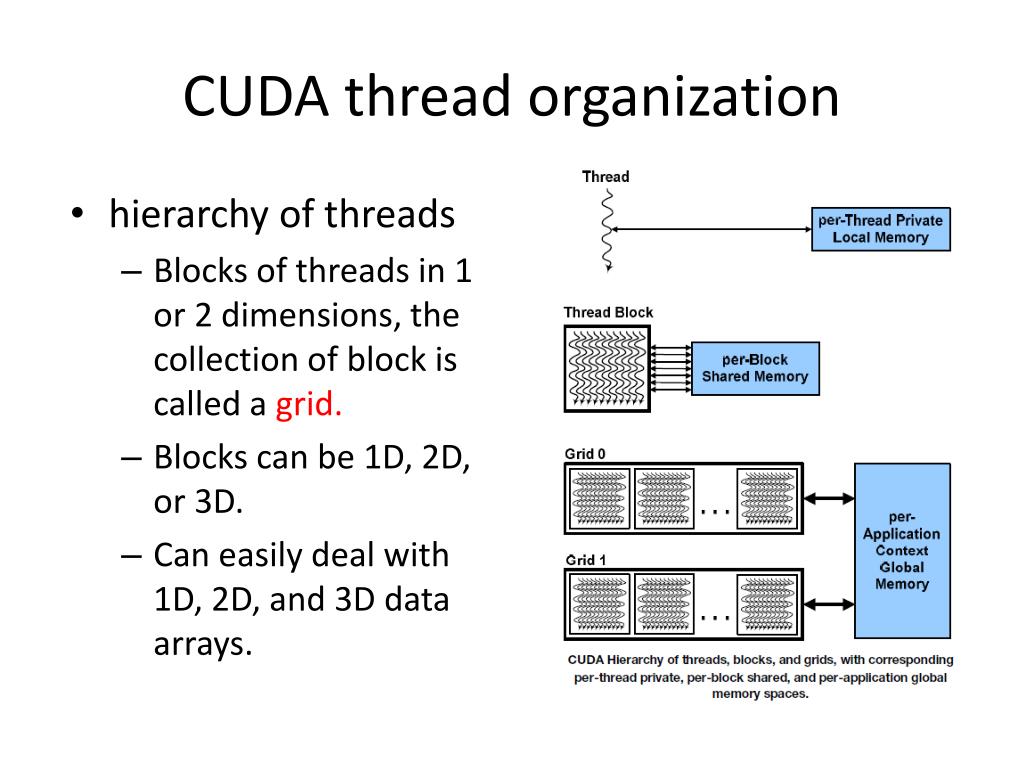

The kernel, `__global__ void MatAdd(float A, float B, float C)`, is a global function that can be called from C land. The snippet above runs N-by-N copies of that same function. I said N-by-N because threads can have an x-index and a y-index. This is mainly to make it convenient for people who work on two-dimensional graphics, so that they don't have to construct the x- and y-coordinates by hand. It is also useful for working on two-dimensional arrays in general. The thread dimensions can also take a z-axis, which enables you to naturally process 3D arrays and other three-dimensional data.
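Here is a sketch of how the x- and y-index of each thread can map onto matrix coordinates. It is modeled on the `MatAdd` example from the CUDA Programming Guide, but the array extents `[N][N]`, the size `N = 8`, the host helper `mat_add_demo` and the memory-management code are my own assumptions, so treat it as an illustration and not as the original snippet.

```cuda
#include <cuda_runtime.h>

constexpr int N = 8;  // hypothetical matrix size; N * N threads must fit in one block

// Each thread adds one element, using its x- and y-index as matrix coordinates.
__global__ void MatAdd(float A[N][N], float B[N][N], float C[N][N])
{
    int i = threadIdx.x;
    int j = threadIdx.y;
    C[i][j] = A[i][j] + B[i][j];
}

// Hypothetical host helper: runs MatAdd on the GPU and checks every element.
bool mat_add_demo()
{
    float hA[N][N], hB[N][N], hC[N][N];
    for (int i = 0; i < N; ++i)
        for (int j = 0; j < N; ++j) {
            hA[i][j] = static_cast<float>(i);
            hB[i][j] = static_cast<float>(j);
            hC[i][j] = -1.0f;  // sentinel that the kernel should overwrite
        }

    const size_t bytes = sizeof(float) * N * N;
    float (*dA)[N] = nullptr;
    float (*dB)[N] = nullptr;
    float (*dC)[N] = nullptr;

    bool ok = cudaMalloc((void **)&dA, bytes) == cudaSuccess &&
              cudaMalloc((void **)&dB, bytes) == cudaSuccess &&
              cudaMalloc((void **)&dC, bytes) == cudaSuccess;
    if (ok) {
        cudaMemcpy(dA, hA, bytes, cudaMemcpyHostToDevice);
        cudaMemcpy(dB, hB, bytes, cudaMemcpyHostToDevice);

        int numBlocks = 1;           // one block...
        dim3 threadsPerBlock(N, N);  // ...of N-by-N threads
        MatAdd<<<numBlocks, threadsPerBlock>>>(dA, dB, dC);

        // This blocking copy also waits for the kernel to finish.
        ok = cudaMemcpy(hC, dC, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;
    }
    cudaFree(dA);  // cudaFree on a null pointer is a no-op
    cudaFree(dB);
    cudaFree(dC);

    for (int i = 0; i < N; ++i)
        for (int j = 0; j < N; ++j)
            if (hC[i][j] != static_cast<float>(i + j))
                ok = false;
    return ok;
}
```

Nobody had to compute a flat offset by hand here: `threadIdx.x` and `threadIdx.y` are used directly as the row and column. A 3D version would read `threadIdx.z` in the same way.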

In my last post I gave an overview of how GPUs execute code differently from CPUs, and how NVIDIA's toolchain compiles CUDA code down into an intermediate assembly language called PTX before assembling it into binaries. Today I will show you the most important features of CUDA programs (threads, blocks, and host and device code) with examples straight from the CUDA Toolkit documentation itself, Stack Overflow, and other places.

Since a CUDA program runs code on both the CPU and the GPU, there's got to be a way for the developer and the program itself to identify which functions are supposed to run on the CPU and which on the GPU. CUDA calls code that is slated to run on the CPU host code, and functions that are bound for the GPU device code. You can tell the two apart by looking at the function signatures: device code has the `__global__` or `__device__` keyword at the beginning of the function, while host code has no such qualifier. It is, after all, supposed to run on the CPU, so having a mandatory qualifier at the beginning when the rest of the internet is compiling without any at all doesn't make much sense. Sometimes you will equivalently see `__host__`, but this is an optional keyword.

When you use `__device__` before a variable, you are making a variable that exists in what's called the global memory space of the GPU. It can be accessed directly from threads in all blocks, and the host can access it using special CUDA functions that copy variables to different places (these are latency-heavy operations though, so they should only be used at times when performance is not critical, such as the beginning and end of a program). One copy of each global-memory variable exists per GPU. `__host__` is equivalent to not using any keyword at all: it defines a plain old C/C++ function. And finally, using `__host__ __device__` together enables a function to run on both the CPU and the GPU. Generally it is more efficient to separate CPU-bound and GPU-bound code, because you will then be able to optimize the GPU code separately.

Want to know more about the difference between using `__global__` and `__device__`? Look no further, because Stack Overflow user FacundoGFlores explained the difference very well in this answer, which I shall quote below:

> Differences between `__device__` and `__global__` functions are:
>
> `__device__` functions can be called only from the device, and it is executed only in the device.
>
> `__global__` functions can be called from the host, and it is executed in the device.
>
> Therefore, you call `__device__` functions from kernels functions, and you don't have to set the kernel settings. You can also "overload" a function, e.g : you can declare `void foo(void)` and `__device__ foo (void)`, then one is executed on the host and can only be called from a host function. The other is executed on the device and can only be called from a device or kernel function.
>
> You can also visit the following link:, it was useful for me.

If we go to the Google Code link mentioned in the quote, then we find a function that uses `__device__`.
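To make the qualifiers concrete, here is a minimal sketch that puts `__global__`, `__device__` (on both a function and a variable) and `__host__ __device__` in one file. Every name in it (`square`, `addOne`, `deviceResult`, `compute`, `compute_on_gpu`) is hypothetical and not taken from any of the sources quoted in this post. `cudaMemcpyFromSymbol` is one of the CUDA runtime functions for copying a `__device__` variable back to the host.

```cuda
#include <cuda_runtime.h>

// __host__ __device__: compiled for both the CPU and the GPU.
__host__ __device__ int square(int x) { return x * x; }

// __device__ function: runs on the GPU and can only be called from device code.
__device__ int addOne(int x) { return x + 1; }

// __device__ variable: lives in the GPU's global memory, one copy per GPU.
__device__ int deviceResult;

// __global__ kernel: launched from the host, executed on the device.
__global__ void compute(int x)
{
    deviceResult = addOne(square(x));
}

// Host function: no qualifier needed (writing __host__ would be equivalent).
int compute_on_gpu(int x)
{
    compute<<<1, 1>>>(x);  // one block with one thread is enough for this sketch

    int result = -1;
    // Blocking copy from the __device__ variable to host memory;
    // it also waits for the kernel above to finish.
    if (cudaMemcpyFromSymbol(&result, deviceResult, sizeof(int)) != cudaSuccess)
        return -1;
    return result;
}
```

With this in place, `square(3)` can be called directly on the host, while `compute_on_gpu(3)` goes through the kernel and should come back with `3 * 3 + 1`. Note that `addOne` is never launched with the `<<<...>>>` syntax; it is simply called from the kernel.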
